Faces as Biomarkers, Children as Datasets

Turning Consent Into a License, Turning Faces Into Data

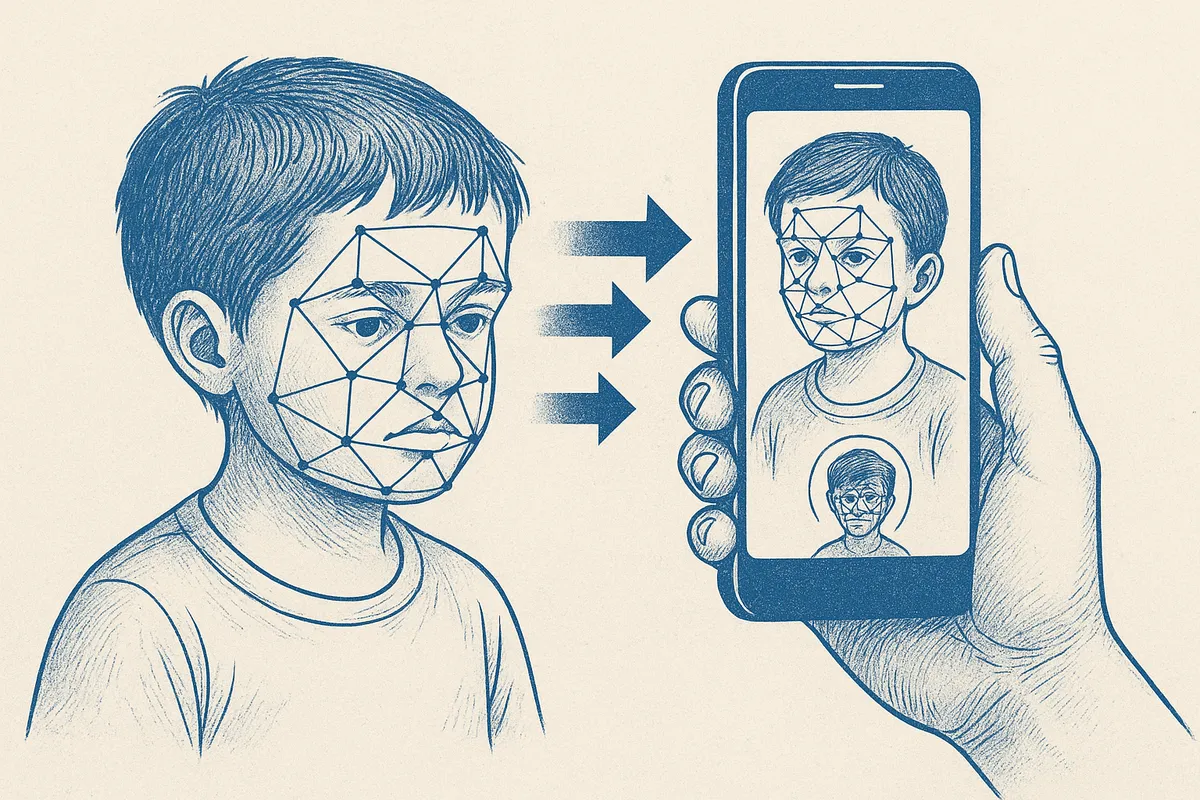

A new article in Scientific Reports (Alkhawaldeh et al., 2025) offers a machine that claims it can see autism in a child’s face. The architecture is dense with jargon (attention layers, residual blocks and BiLSTMs), yet the core claim is blunt. Feed in thousands of child portraits, then extract patterns and... Voilà! Predict autism almost instantly. Their dataset: the so-called Autism Facial Image Dataset (AFID), nearly 3,000 child faces scraped from the internet and released under a Creative Commons license. Their promise: a smartphone app that lets a pediatrician or a parent point a camera and get a diagnosis.

Pathology as Default

The paper rehearses the autism-as-deficit script, recycling the same tired framing used in countless studies before it. Autism described as neurological disorder. Deficits in cognition, motor skills and social interaction, alongside repetitive behaviors. Prevalence numbers flagged as crisis. The villain is diagnostic delay. The hero is automation. No space given to autism as culture, identity or divergent way of being.

Consent by License, Not by Person

The authors pause to acknowledge sensitivity. Then they invoke a license. Because the AFID dataset is released under a CC0 public domain dedication, they treat it as fair game. Consent becomes a matter of paperwork, not people. Children’s faces, mostly European and American, are training material. The question of whether anyone wanted their image used this way is never asked.

Accuracy and Its Shadow

The model posts statistics: about 95% on a metric called the area under the ROC curve (AUC), which summarizes how well the system separates autistic from non-autistic cases, and accuracy above 87%. The numbers shine. But what do they conceal? What happens to a child falsely flagged by a machine? What about those missed entirely? What will schools, doctors, insurers or governments do with a technology that claims to read autism off a face? Metrics are not neutral. They are levers of power.
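To make the question of false flags concrete, here is a rough back-of-the-envelope sketch. Every input is an assumption for illustration, not a figure from the paper: we imagine 10,000 children screened, a hypothetical 3% of them autistic, and we treat the reported 87% accuracy as if it were both the sensitivity and the specificity of the tool. The function name `screening_outcomes` is ours.

```python
def screening_outcomes(population, prevalence, sensitivity, specificity):
    """Expected outcome counts when a screening tool is run on a population.

    All arguments are hypothetical inputs chosen for illustration.
    """
    autistic = population * prevalence
    non_autistic = population - autistic

    true_positives = autistic * sensitivity        # autistic and flagged
    false_negatives = autistic - true_positives    # autistic and missed
    true_negatives = non_autistic * specificity    # not autistic, not flagged
    false_positives = non_autistic - true_negatives  # not autistic, flagged

    flagged = true_positives + false_positives
    return {
        "true_positives": true_positives,
        "false_negatives": false_negatives,
        "false_positives": false_positives,
        "accuracy": (true_positives + true_negatives) / population,
        # Share of flagged children who are actually autistic.
        "ppv": true_positives / flagged,
    }


# Assumed inputs: 10,000 children, 3% prevalence, 87% sensitivity and specificity.
result = screening_outcomes(10_000, 0.03, 0.87, 0.87)
for name, value in result.items():
    print(f"{name}: {value:.2f}")
```

Under these made-up inputs the tool still scores 87% accuracy, yet it flags roughly 1,261 non-autistic children alongside 261 autistic ones and misses 39. Only about 17% of the children it flags would actually be autistic. Different assumptions give different counts, but the pattern holds whenever the condition is uncommon in the group being screened.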

Toward Surveillance, Not Support

The dream spelled out is deployment. A cheap tool, accessible and mobile. A parent with a phone, a community worker with a tablet, a clinic with an app. All able to scan children for autism in minutes. This is presented as progress toward early intervention. But the path it sketches is different. Screening without context. Labels delivered without dialogue. Autistic life collapsed into a probability score.

Our Takeaway

Alkhawaldeh and colleagues call this early diagnosis. We call it biometric policing of childhood difference. Childhood faces are turned into datasets. Consent is displaced by copyright. Autism is recast as a defect to be spotted on sight. The technical triumph is not the point. The point is what happens when an algorithm claims the right to tell you who you are. Accuracy is not the measure here. What is at stake is the erasure of autistic personhood, and we won't stand for it.